The only way to substantiate a belief is to try to disprove it.

(Irrationality: The Enemy Within, page 48)

Sutherland was 65 when he wrote this book, and nearing the end of a prestigious career in psychology research. His aim was to lay out, in 23 themed chapters, all the psychological and sociological research data from hundreds of experiments, which show just how vulnerable the human mind is to a plethora of unconscious biases, prejudices, errors, mistakes, misinterpretations and so on – the whole panoply of ways in which supposedly ‘rational’ human beings can end up making grotesque mistakes.

By the end of the book, Sutherland claims to have defined and demonstrated over 100 distinct cognitive errors humans are prone to (p.309).

I first read this book in 2000 and it made a big impact on me because I didn’t really know that this entire area of study existed, and had certainly never read such a compendium of sociology and psychology experiments before.

I found the naming of the various errors particularly powerful. They reminded me of the lists of weird and wonderful Christian heresies I was familiar with from years of reading early Christian history. And, after all, the two have a lot in common, both being lists of ‘errors’ which the human mind can make as it falls short of a) orthodox theology and b) optimally rational thinking, the great shibboleths of the Middle Ages and of the Modern World, respectively.

Rereading Irrationality now, 20 years later, after having brought up two children, and worked in big government departments, I am a lot less shocked and amazed. I have witnessed at first hand the utter irrationality of small and medium-sized children; and I have seen so many examples of corporate conformity, the avoidance of embarrassment, unwillingness to speak up, deferral to authority, and general mismanagement in the civil service that, upon rereading the book, hardly any of it came as a surprise.

But to have all these errors so carefully named and defined and worked through in a structured way, with so many experiments giving such vivid proof of how useless humans are at even basic logic, was still very enjoyable.

What is rationality?

You can’t define irrationality without first defining what you mean by rationality:

Rational thinking is most likely to lead to the conclusion that is correct, given the information available at the time (with the obvious rider that, as new information comes to light, you should be prepared to change your mind).

Rational action is that which is most likely to achieve your goals. But in order to achieve this, you have to have clearly defined goals. Not only that but, since most people have multiple goals, you must clearly prioritise your goals.

Few people think hard about their goals and even fewer think hard about the many possible consequences of their actions. (p.129)

Cognitive biases contrasted with logical fallacies

Before proceeding it’s important to point out that there is a wholly separate subject of logical fallacies. As part of his Philosophy A-Level my son was given a useful handout with a list of about fifty logical fallacies, i.e. errors in reasoning. But logical fallacies are not the same as cognitive biases.

A logical fallacy stems from an error in a logical argument; it is specific and easy to identify and correct. Cognitive bias derives from deep-rooted, thought-processing errors which themselves stem from problems with memory, attention, self-awareness, mental strategy and other mental mistakes.

Cognitive biases are, in most cases, far harder to acknowledge and often very difficult to correct.

Fundamentals of irrationality

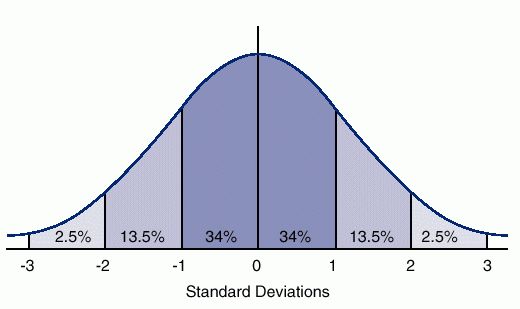

1. Innumeracy. One of the largest causes of all irrational behaviour is that people by and large don’t understand statistics or maths. Thus most people are not intellectually equipped to understand the most reliable type of information available to human beings – data in the form of numbers. Instead they tend to make decisions based on a wide range of faulty and irrational psychological biases.

2. Physiology. People are often influenced by physiological factors. Apart from obvious ones like tiredness or hunger, which are universally known to affect people’s cognitive abilities, there are also a) drives (direct and primal) like hunger, thirst, sex, and b) emotions (powerful but sometimes controllable) like love, jealousy, fear and – especially relevant – embarrassment, specifically, the acute reluctance to acknowledge limits to your own knowledge or that you’ve made a mistake.

At a more disruptive level, people might be alcoholics, drug addicts, or prey to a range of other obsessive behaviours, not to mention suffering from a wide range of mental illnesses or conditions which undermine any attempt at rational decision-making, such as stress, anxiety or, at the other end of the spectrum, depression and loss of interest.

3. The functional limits of consciousness. Numerous experiments have shown that human beings have a limited capacity to process information. Given that people rarely have a) a sufficient understanding of the relevant statistical data to begin with, or b) the RAM capacity to process all the data required to make the optimum decision, it is no surprise that most of us fall back on all manner of more limited, non-statistical biases and prejudices when it comes to making decisions.

4. The wish to feel good. The world is threatening, dangerous and competitive. Humans want to feel safe, secure, calm, and in control. This is fair enough, but it does mean that people have a way of blocking out any kind of information which threatens them. Most people irrationally believe that they are cleverer than they in fact are, and that they are qualified in areas of activity or knowledge where they aren’t. People stick to bad decisions for fear of being embarrassed or humiliated, and for the same reason reject new evidence which contradicts their position.

Named types of error and bias

Jumping to conclusions

Sutherland tricks the reader on page one, by asking a series of questions and then pointing out that, if you tried to answer about half of them, you are a fool since the questions didn’t contain enough information to arrive at any sort of solution. Jumping to conclusions before we have enough evidence is a basic and universal error. One way round this is to habitually use a pen and paper to set out the pros and cons of any decision, which also helps highlight areas where you realise you don’t have enough information.

The availability error

All the evidence is that the conscious mind can only hold a small number of data or impressions at any one time (near the end of the book, Sutherland claims the maximum is seven items, p.319). Many errors are due to people reaching for the most available explanation, using the first thing that comes to mind, and not taking the time to investigate further and make a proper, rational survey of the information.

Many experiments show that you can unconsciously bias people by planting ideas, words or images in their minds which then directly affect decisions they take hours later about supposedly unconnected issues.

Studies show that doctors who have seen a run of a certain condition among their patients become more likely to diagnose it in new patients who don’t have it, because the erroneous diagnosis is more ‘available’.

The news media is hard-wired to publicise shocking and startling stories, which constantly misleads the reading public. One tourist eaten by a shark in Australia eclipses the fact that you are far more likely to die in a car crash than be eaten by a shark.

Thus ‘availability’ is also affected by impact or prominence. Experimenters read out a list of names of men and women to two groups, without telling them that there were exactly 25 men and 25 women, and asked them to guess the ratio of the sexes. If the list included some famous men, the group was influenced to think there were more men; if it included famous women, the group thought there were more women than men. This is the prominence effect.

The entire advertising industry is based on the availability error in the way it invents straplines, catchphrases and jingles designed to pop to the front of your mind when you consider any type of product, making those products – in other words – super available.

I liked the way the well-known practice of pricing goods at just under the nearest pound is attributed to the availability error. Most of us find £5.95 much more attractive than £6. It’s because we only process the initial 5, the first digit: it is more available.

Numerous studies have shown that the availability error is hugely increased under stress. In stressful situations, such as an accident, people fixate on the first solution that comes to mind and refuse to budge.

The primacy effect

First impressions. Interviewers make up their minds about a candidate for a job in the first minute of an interview and then spend the rest of the time collecting data to confirm that first impression.

The anchor effect

In picking a number, people tend to choose one close to any number they’ve recently been presented with. One group was asked whether the population of Turkey was bigger than 5 million, another whether it was less than 65 million, and both were then asked to estimate what it actually was. The group who’d had 5 million planted in their minds hovered around 15 million; the group who’d had 65 million hovered around 35 million. They were both wrong. It is 80 million.

The halo effect

People extrapolate the nature of the whole from just one quality e.g. in tests, people think attractive people must be above average in personality and intelligence although, of course, there is no reason why they should be. Hence this error’s alternative name, the ‘physical attractiveness stereotype’. The halo effect is fundamental to advertising, which seeks to associate images of beautiful men, women, smiling children, sunlit countryside etc with the product being marketed.

The halo effect and the primacy effect are both reasons why interviews are a poor way to assess candidates for jobs or places.

The devil effect

Opposite of the above: extrapolating from negative appearances to the whole. This is why it’s important to dress smartly for an interview or court appearance; it really does influence people. In one experiment, examiners were given identical answers, some written in terrible handwriting, some in beautifully clear handwriting. The scripts with clear handwriting consistently scored higher marks, despite their identical factual content.

Illusory correlation

People find links between disparate phenomena which simply don’t exist, thus:

- people exaggerate the qualities of people or things which stand out from their environments

- people associate rare qualities with rare things

This explains a good deal of racial prejudice: a) immigrants stand out, and b) a handful of immigrants behave egregiously; associating the two is a classic example of illusory correlation. What is missing is any account of the negative cases, i.e. the millions of immigrants who do not behave egregiously and whose inclusion would give you a more accurate statistical picture. Pay attention to negative cases.

Stereotypes

- People tend to notice anything which supports their existing opinions.

- We notice the actions of ‘minorities’ much more than the actions of the invisible majority.

Projection

People project onto neutral phenomena, patterns and meanings they are familiar with or which bolster their beliefs. This is compounded by –

Obstinacy

Sticking to personal opinions (often formed in haste, or from first impressions) despite all evidence to the contrary. Aka the boomerang effect: when someone’s opinions are challenged, they just become more obstinate. Aka belief persistence. Aka pig-headedness. And this is exacerbated by –

Group think

People associate with others like themselves, which makes them feel safe by a) confirming their beliefs and b) letting them hide in a crowd. Experiments have shown how people in self-supporting groups are liable to become more extreme in their views. Also – and I’ve seen this myself – groups will take decisions that almost every member of the group, individually, knows to be wrong, but no-one is prepared to risk the embarrassment or humiliation of pointing it out. The Emperor’s New Clothes. Groups are more likely to make irrational decisions than individuals are.

Confirmation bias

The tendency to search for, interpret, favour, and recall information in a way that confirms one’s pre-existing beliefs or hypotheses. In one experiment, people were read a series of statements, each naming a person, giving their profession and then two adjectives describing them, one that you’d expect, the other less predictable: ‘Carol, a librarian, is attractive and serious’. When given a quiz at the end of the session, participants showed a marked tendency to remember the expected adjective and forget the unexpected one. Everyone remembered that the air stewardess was ‘attractive’ but remembered the librarian for being ‘serious’.

We remember what we expect to hear. (p.76)

Or: we remember what we remember in line with pre-existing habits of thought, values etc.

We marry people who share our opinions, we make friends with people who share our opinions, we agree with everyone in our circle on Facebook.

Self-serving biases

When things go well, people take the credit, when things go badly, people blame external circumstances.

Avoiding embarrassment

People obey bad orders, especially in a group situation, because they don’t want to stick out. People go along with bad decisions because they don’t want to stick out. People don’t want to admit they’ve made a mistake, in front of others, or even to themselves.

Avoiding humiliation

People are reluctant to admit mistakes in front of others. And rather than make a mistake in front of others, people would rather keep quiet and say nothing (in a meeting situation) or do nothing, if everyone else is doing nothing (in an action situation). Both of these avoidances feed into –

Obedience

The Milgram experiment showed how readily people will carry out an atrocity at the behest of an authoritative man in a white coat. In its best-known version, around two-thirds of his subjects went all the way to inflicting what they believed were life-threatening levels of electric shock on the victim, supposedly wired up in the next-door room and emitting blood-curdling (faked) screams of pain. Similarly, 72% of Senior House Officers wouldn’t question the decision of a consultant, even if they thought he was wrong.

Conformity

Everyone else is saying or doing it, so you say or do it so as not to stick out / risk ridicule.

Obedience is behaving in a way ordered by an authority figure. Conformity is behaving in a way dictated by your peers.

The wrong length lines experiment

You’re put in a room with half a dozen stooges, shown a piece of card with a line on it and then another piece of card with three lines of different length on it, and asked which of the lines on card B is the same length as the line on card A. To your amazement, everyone else in the room chooses a line which is obviously wildly wrong. In experiments, up to 75% of people in this situation go along with the crowd and choose a line which they can see, and know, is wrong – because everyone else did.

Sunk costs fallacy

The belief that you have to continue wasting time and money on a project because you’ve invested x amount of time and money to date. Or ‘throwing good money after bad’.

Sutherland keeps cycling round the same nexus of issues, which is that people jump to conclusions – based on availability, stereotypes, the halo and anchor effects – and then refuse to change their minds, twisting existing evidence to suit them, ignoring contradictory evidence.

Misplaced consistency & distorting the evidence

Nobody likes to admit (especially to themselves) that they are wrong. Nobody likes to admit (especially to themselves) that they are useless at taking decisions.

Our inability to acknowledge our own errors even to ourselves is one of the most fundamental causes of irrationality. (p.100)

And so:

- people consistently avoid exposing themselves to evidence that might disprove their beliefs

- on being faced with evidence that disproves their beliefs, they ignore it

- or they twist new evidence so as to conform to their existing beliefs

- people selectively remember their own experiences, or misremember the evidence they were using at the time, in order to validate their current decisions and beliefs

- people will go to great lengths to protect their self-esteem

Sutherland says the best cleanser / solution / strategy for fixed and obstinate ideas is:

- to make the time to gather as much evidence as possible and

- to try to disprove your own position.

The best solution will be the one that you have tried to demolish with all the evidence you have, and that still remains standing.

People tend to seek confirmation of their current hypothesis, whereas they should be trying to disconfirm it. (p.138)

Fundamental attribution error

Ascribing other people’s behaviour to their character or disposition rather than to their situation. Subjects in an experiment watched two people holding an informal quiz: the first person made up questions (based on what he knew) and asked the second person who, naturally enough, hardly got any of them right. Observers consistently credited the quizzer with higher intelligence than the answerer, completely ignoring the in-built bias of the situation, and instead ascribing the difference to character.

We are quick to personalise and blame in a bid to turn others into monolithic entities which we can then define and control – this saves time and effort, and makes us feel safer and more secure – whereas the evidence is that all people are capable of a wide range of behaviours depending on the context and situation.

Once you’ve pigeon-holed someone, you will tend to notice aspects of their behaviour which confirm your view – confirmation bias and/or illusory correlation and a version of the halo/devil effect. One attribute colours your view of a more complex whole.

Actor-Observer Bias

Variation on the above: when we screw up we find all kinds of reasons in the situation to exonerate ourselves: we performed badly because we’re ill, jet-lagged, grandma died, reasons that are external to us. If someone else screws up, it is because they just are thick, lazy, useless. I.e. we think of ourselves as complex entities subject to multiple influences, and others as monolithic types.

False Consensus Effect

Over-confidence that other people think and feel like us, that our beliefs and values are the norm – in my view one of the profound cultural errors of our time.

It is a variation of the ever-present Availability Error because when we stop to think about any value or belief we will tend to conjure up images of our family and friends, maybe workmates, the guys we went to college with, and so on: in other words, the people available to memory – simply ignoring the fact that these people are a drop in the ocean of the 65 million people in the UK. See Facebubble.

The False Consensus Effect reassures us that we are normal, our values are the values, we’re the normal ones: it’s everyone else who is wrong, deluded, racist, sexist, whatever we don’t approve of.

Elsewhere, I’ve discovered some commentators naming this the Liberal fallacy:

For liberals, the correctness of their opinions – on universal health care, on Sarah Palin, on gay marriage – is self-evident. Anyone who has tried to argue the merits of such issues with liberals will surely recognize this attitude. Liberals are pleased with themselves for thinking the way they do. In their view, the way they think is the way all right-thinking people should think. Thus, ‘the liberal fallacy’: Liberals imagine that everyone should share their opinions, and if others do not, there is something wrong with them. On matters of books and movies, they may give an inch, but if people have contrary opinions on political and social matters, it follows that the fault is with the others. (Commentary magazine)

Self-Serving Bias

People tend to give themselves credit for successes but lay the blame for failures on outside causes. If the project is a success, it was all due to my hard work and leadership. If it’s a failure, it’s due to circumstances beyond my control, other people not pulling their weight etc.

Preserving one’s self-esteem

These three errors are all aspects of preserving our self-esteem. You can see why this has an important evolutionary and psychological purpose. In order to live, we must believe in ourselves, our purposes and capacities, believe our values are normal and correct, believe we make a difference, that our efforts bring results. No doubt it is a necessary belief and a collapse of confidence and self-belief can lead to depression and possibly despair. But that doesn’t make it true.

People should learn the difference between having self-belief to motivate themselves, and developing the techniques to gather the full range of evidence – including the evidence against their own opinions and beliefs – which will enable them to make correct decisions.

Representative error

People estimate the likelihood of an event by comparing it to a prototype / stereotype that already exists in their minds. Our prototype is what we think is the most relevant or typical example of a particular event or object. This often happens around notions of randomness: people have a notion of what randomness should look like, i.e. utterly scrambled. But in fact plenty of random events or sequences arrange themselves into patterns we find meaningful, so we dismiss them as not really random. I.e. we have judged them against our preconception of what random ought to look like.

Ask a selection of people which of these three sequences of six coin tosses (H for heads, T for tails) is random:

- TTTTTT

- TTTHHH

- THHTTH

Most people will choose the third because it feels random. But of course all three are equally likely, or unlikely.
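The arithmetic is easy to check by brute force. Here is a minimal Python sketch, assuming a fair coin and independent tosses (the names ALL_SEQUENCES and probability_of are my own, purely for illustration): it enumerates all 2^6 = 64 possible sequences and works out the share taken by each of the three above.

```python
from fractions import Fraction
from itertools import product

# Every possible sequence of six tosses of a fair coin: 2**6 = 64 of them.
ALL_SEQUENCES = ["".join(seq) for seq in product("HT", repeat=6)]


def probability_of(sequence: str) -> Fraction:
    """Exact probability of one specific sequence of six tosses.

    Assumes a fair coin and independent tosses, so every one of the
    64 sequences is equally likely.
    """
    matches = sum(1 for s in ALL_SEQUENCES if s == sequence)
    return Fraction(matches, len(ALL_SEQUENCES))


for seq in ("TTTTTT", "TTTHHH", "THHTTH"):
    print(seq, probability_of(seq))
```

Each line prints 1/64. What makes THHTTH feel random is that it resembles the large family of jumbled-looking sequences, not that it is itself any more probable than TTTTTT.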

Hindsight

In numerous experiments people have been asked to predict the outcome of an event, then after the event questioned about their predictions. Most people forget their inaccurate predictions and misremember that they were accurate.

Overconfidence

Most professionals have been shown to overvalue their expertise i.e. exaggerate their success rates.

Statistics

A problem with Irrationality and with John Allen Paulos’s book Innumeracy is that they mix up cognitive biases and statistics. Now, statistics is a completely separate and distinct area from errors of thought and cognitive biases. You can imagine someone who avoids all of the cognitive and psychological errors named above, but still makes howlers when it comes to statistics simply because they’re not very good at it.

This is because the twin areas of Probability and Statistics are absolutely fraught with difficulty. Either you have been taught the correct techniques, and understand them, and practise them regularly (and both books demonstrate that even experts make terrible mistakes in the handling of statistics and probability) or, like most of us, you have not and do not.

As Sutherland points out, most people’s knowledge of statistics is non-existent. Since we live in a society whose public discourse i.e. politics, is ever more dominated by statistics, there is a simple conclusion: most of us have little or no understanding of the principles and values which underpin modern society.

Errors in estimating probability or misunderstanding samples, opinion polls and so on, are probably a big part of irrationality, but I felt that they are so distinct from the psychological biases discussed above, that they almost require a separate volume, or a separate ‘part’ of this volume.

Briefly, common statistical mistakes are:

- too small a sample size

- biased sample

- not understanding that a combination of two events is never more likely than either event on its own

- neglecting the base rate, or a priori probability, of an event

- not being aware of the law of large numbers: the more often a random trial is repeated, the closer the observed frequency of an outcome is likely to be to its theoretical probability

- not being aware of regression to the mean
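The law of large numbers, and the danger of too small a sample, can be seen in a quick simulation. This is a minimal Python sketch, assuming a fair coin; the function name running_frequency and the fixed seed are my own choices, the seed only there so that runs are repeatable.

```python
import random


def running_frequency(n_tosses: int, seed: int = 1) -> float:
    """Simulate n_tosses of a fair coin and return the observed share of heads.

    The seed is fixed only so that the same run can be reproduced.
    """
    rng = random.Random(seed)
    heads = sum(1 for _ in range(n_tosses) if rng.random() < 0.5)
    return heads / n_tosses


for n in (10, 100, 10_000, 100_000):
    freq = running_frequency(n)
    print(f"{n:>7} tosses: share of heads = {freq:.4f}, gap from 0.5 = {abs(freq - 0.5):.4f}")
```

With only 10 tosses the share of heads can easily land well away from 0.5; with 100,000 it will typically sit very close to it. The gap tends to shrink as the sample grows, though it is not guaranteed to shrink at every step.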

Gambling

My suggestion that mistakes in handling statistics are not really the same as unconscious cognitive biases applies even more to the world of gambling. Gambling is a highly specialised and advanced form of probability applied to games. The subject has been pored over by very clever people for centuries. It’s not a question of a few general principles; this is a vast, book-length subject in its own right. A practical point that emerges from Sutherland’s examples is:

- always work out the expected value of a bet, i.e. the amount to be won times the probability of winning it, and compare that with the stake
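To make the point concrete, here is a minimal Python sketch of the calculation. It assumes a simple bet where you either lose your stake or collect a fixed payout; the function name and the roulette figures are my own illustration, not Sutherland’s. European roulette has 37 pockets and pays 35 to 1 on a single number, so a winning one-unit bet returns 36 units including the stake.

```python
from fractions import Fraction


def expected_value(stake: Fraction, payout: Fraction, p_win: Fraction) -> Fraction:
    """Expected profit of a simple win-or-lose bet.

    stake  -- amount paid to place the bet (lost if the bet loses)
    payout -- total amount handed back if the bet wins, including the stake
    p_win  -- probability that the bet wins
    """
    # Expected amount handed back, minus what the bet cost.
    return p_win * payout - stake


# Illustration: a 1-unit bet on a single number in European roulette.
ev = expected_value(Fraction(1), Fraction(36), Fraction(1, 37))
print(ev, float(ev))
```

Run as written, it gives an expected value of -1/37 per unit staked: on average the bettor loses about 2.7p for every pound bet, however lucky any individual evening feels.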

The two-by-two box

It’s taken me some time to understand this principle, which is given in both Paulos and Sutherland.

When two elements with a yes/no result are combined, people tend to look at the most striking correlation and fixate on it. The only way to avoid the false conclusions that follow from that is to draw a 2 x 2 box and work through the figures.

Here is a table of 1,000 women who had a mammogram because their doctors thought they had symptoms of breast cancer.

| | Women with cancer | Women with no cancer | Total |
|---|---|---|---|
| Women with positive mammography | 74 | 110 | 184 |
| Women with negative mammography | 6 | 810 | 816 |
| Total | 80 | 920 | 1000 |

Bearing in mind that a conditional probability is the probability that X is true given that Y is true (i.e. the probability of X is conditional on Y), this table allows us to work out the following conditional probabilities:

1. The probability of getting a positive mammogram or test result, if you do actually have cancer, is 74 out of 80 = .92 (out of the 80 women with cancer, 74 were picked up by the test)

2. The probability of getting a negative mammogram or test result, if you do not have cancer, is 810 out of 920 = .88

3. The probability of having cancer if you test positive, is 74 out of 184 = .40

4. The probability of having cancer if you test negative, is 6 out of 816 = .01

So 92% of women with cancer were picked up by the test. BUT Sutherland quotes a study which showed that a shocking 95% of doctors thought that this figure – 92% – was also the probability that a patient who tested positive had the disease. The vast majority of the US doctors surveyed thought that, if you tested positive, you had a 92% chance of having cancer. They fixated on the 92% figure and transposed it from one conditional probability to the other, confusing the two. But this is wrong. The probability that a woman who tests positive actually has cancer is given in conclusion 3: 74 out of 184 = 40%. This is because 110 of the 184 women who tested positive did not have cancer.

So if a woman tested positive for breast cancer, the chances of her actually having it are 40%, not 92%. Quite a big difference (and quite an indictment of the test, by the way). And yet 95% of doctors thought that if a woman tested positive she had a 92% likelihood of having cancer.

Sutherland goes on to quote a long list of other situations where doctors and others have comprehensively misinterpreted the results of studies like this, with sometimes very negative consequences.

The moral of the story is if you want to determine whether one event is associated with another, never attempt to keep the co-occurrence of events in your head. It’s just too complicated. Maintain a written tally of the four possible outcomes and refer to these.
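The four-cell tally can be sketched in a few lines of Python. This is an illustration only: the class and method names are my own invention, not anything from Sutherland or Paulos, and the counts are the 1,000-woman mammography table above. Each conditional probability simply divides one cell by the relevant row or column total.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class TwoByTwo:
    """Counts for the four combinations of test result and condition."""
    true_pos: int   # tested positive, has the condition
    false_pos: int  # tested positive, does not have it
    false_neg: int  # tested negative, has the condition
    true_neg: int   # tested negative, does not have it

    def p_positive_given_condition(self) -> Fraction:
        # Column "has the condition": how many of them the test caught.
        return Fraction(self.true_pos, self.true_pos + self.false_neg)

    def p_negative_given_no_condition(self) -> Fraction:
        # Column "no condition": how many of them the test cleared.
        return Fraction(self.true_neg, self.true_neg + self.false_pos)

    def p_condition_given_positive(self) -> Fraction:
        # Row "tested positive": how many of them really have it.
        return Fraction(self.true_pos, self.true_pos + self.false_pos)

    def p_condition_given_negative(self) -> Fraction:
        # Row "tested negative": how many of them have it anyway.
        return Fraction(self.false_neg, self.false_neg + self.true_neg)


mammography = TwoByTwo(true_pos=74, false_pos=110, false_neg=6, true_neg=810)

checks = {
    "P(positive | cancer)": mammography.p_positive_given_condition(),
    "P(negative | no cancer)": mammography.p_negative_given_no_condition(),
    "P(cancer | positive)": mammography.p_condition_given_positive(),
    "P(cancer | negative)": mammography.p_condition_given_negative(),
}
for label, p in checks.items():
    print(f"{label}: {float(p):.3f}")
```

Writing the four cells down separately is exactly what stops the 92% (a column total) being mistaken for the 40% (a row total).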

Deep causes

Sutherland concludes the book by speculating that all the hundred or so types of irrationality he has documented can be attributed to five fundamental causes:

- Evolution. We evolved to make snap decisions, we are brilliant at processing visual information and responding before we’re even aware of it. Conscious thought is slower, and the conscious application of statistics, probability, regression analysis and so on, is slowest of all. Most people never acquire it.

- Brain structure. As soon as we start perceiving, learning and remembering the world around us our brain cells make connections. The more the experience is repeated, the stronger the connections become. Routines and ruts form, which are hard to budge.

- Heuristics. Everyone develops mental short-cuts, techniques to help make quick decisions. Not many people bother with the laborious statistical techniques for assessing relative benefits which Sutherland describes.

- Failure to use elementary probability and elementary statistics. Ignorance is another way of describing this, mass ignorance. Sutherland (being an academic) blames the education system. I, being a pessimist, attribute it to basic human nature. Lots of people just are lazy, lots of people just are stupid, lots of people just are incurious.

- Self-serving bias. In countless ways people are self-centred, overvalue their judgement and intelligence, overvalue the beliefs of their in-group, refuse to accept it when they’re wrong, refuse to make a fool of themselves in front of others by confessing error or pointing out errors in others (especially the boss) and so on.

I would add two more:

Suggestibility

Humans are just tremendously suggestible. Say a bunch of positive words to test subjects, then ask them questions on an unrelated topic: they’ll answer positively. Take a different representative sample of subjects and run a bunch of negative words past them, then ask them the same unrelated questions, and their answers will be measurably more negative. Everyone is easily suggestible.

Ask subjects how they get a party started and they will talk and behave in an extrovert manner to the questioner. Ask them how they cope with feeling shy and ill at ease at parties, and they will tend to act shy and speak more quietly. Same people, but their thought patterns have been completely determined by the questions asked: the initial terms, or anchor, define the ensuing conversation.

In one experiment, subjects were shown a photo of a car crash. Half were asked to describe what they thought happened when one car hit the other; the other half were asked to describe what they thought happened when one car smashed into the other. Those given the word ‘smashed’ gave much more melodramatic accounts. When followed up a week later and asked to describe what they remembered of the photo, the subjects given the word ‘hit’ described it fairly accurately, whereas those given the word ‘smashed’ added all kinds of details, like a sea of broken glass around the vehicles, which simply weren’t there and which their imaginations had invented, all at the prompting of one word.

Many of the experiments Sutherland quotes demonstrate what you might call higher-level biases, but underlying many of them is this common-or-garden observation: that people are tremendously easily swayed, by both external and internal causes, away from the line of cold logic.

Anthropomorphism

Another big underlying cause is anthropomorphism, namely the attribution of human characteristics to objects, events, chances, odds and so on. In other words, people really struggle to accept the high incidence of random accidents. Almost everyone attributes a purpose or intention to almost everything that happens. This means our perceptions of almost everything in life are skewed from the start.

During the Second World War, Londoners devised innumerable theories about the pattern of German bombing. After the war, analysis of Luftwaffe records showed that the bombing had been more or less random.

The human desire to make sense of things – to see patterns where none exists or to concoct theories… can lead people badly astray. (p.267)

Suspending judgement is about the last thing people are capable of. People are extremely uneasy if things are left unexplained. Most people rush to judgement like water into a sinking ship.

Cures

- keep an open mind

- reach a conclusion only after reviewing all the possible evidence

- it is a sign of strength to change one’s mind

- seek out evidence which disproves your beliefs

- do not ignore or distort evidence which disproves your beliefs

- never make decisions in a hurry or under stress

- where the evidence points to no obvious decision, don’t take one

- learn basic statistics and probability

- substitute mathematical methods (cost-benefit analysis, regression analysis, utility theory) for intuition and subjective judgement

Comments on the book

Out of date

Irrationality was first published in 1992, and this makes the book dated in several ways (which may be why, although the first paperback edition was published by the upmarket mass publisher Penguin, the most recent edition was published by the considerably more niche Pinter & Martin).

In the chapter about irrational business behaviour Sutherland quotes quite a few examples from the 1970s and the oil crisis of 1974. These and other examples – such as the long passage about how inefficient the civil service was in the early 1970s – feel incredibly dated now.

And the whole thing was conceived, researched and written before the internet or any of the digital technology we now take for granted. One can’t help wondering whether the digital age has shortened, or merely added to, the long list of biases, prejudices and faulty thinking which Sutherland catalogues, and what new errors of reason have emerged that are specific to our fabulous digital technology.

On the other hand, out of date though the book is in many ways, it’s surprising to see how some hot-button issues haven’t changed at all. In the passage about the Prisoner’s Dilemma, Sutherland takes as a real-life example the problem the nations of the world were having in 1992 in agreeing to cut carbon dioxide emissions. Sound familiar? He states that the single biggest factor undermining international co-operation against climate change was America’s refusal to sign global treaties to limit global warming. In 1992! Plus ça change.

Grumpy

The book also has passages where Sutherland gives his personal opinions, and some of these sound more like the grousing of a grumpy old man than anything based on evidence.

Thus Sutherland whole-heartedly disapproves of ‘American’ health fads, dismisses health foods as masochistic fashion and is particularly scathing about jogging.

He thinks ‘fashion’ in any sphere of life is ludicrously irrational. He is dismissive of doctors as a profession, accusing them of rejecting statistical evidence, refusing to share information with patients, and wildly over-estimating their own diagnostic abilities.

Sutherland thinks the publishers of learned scientific journals are more interested in making money out of scientists than in ‘forwarding the progress of science’ (p.185).

He thinks the higher average pay that university graduates tend to receive has little to do with their attendance at university and more to do with having well-connected middle- and upper-middle-class parents, and he therefore considers the efforts of successive Education Secretaries to introduce student loans to be unscientific and innumerate (p.186).

Surprisingly, he criticises the consumer magazine Which? for using samples that are too small in its testing (p.215).

In an extended passage he summarises Leslie Chapman’s blistering (and very out of date) critique of the civil service, Your Disobedient Servant, published in 1978 (pp.69-75).

Sutherland really has it in for psychoanalysis, which he accuses of all sorts of irrational thinking such as projecting, false association, refusal to investigate negative instances, failing to take into account the likelihood that the patient would have improved anyway, and so on. Half-way through the book he gives a thumbnail summary:

Self-deceit exists on a massive scale: Freud was right about that. Where he went wrong was in attributing it all to the libido, the underlying sex drive. (p.197)

In other words, the book is liberally sprinkled with Sutherland’s own grumpy personal opinions, which sometimes risk giving it a crankish feel.

Against stupidity the gods themselves contend in vain

Neither this book nor John Allen Paulos’s books take into account the obvious fact that lots of people are, how shall we put it, of low educational achievement. They begin with poor genetic material, are raised in families where no-one cares about education, are let down by poor schools, and are excluded or otherwise demotivated by the whole educational experience, with the result that:

- the average reading age in the UK is 9

- about one in five Britons (over ten million) are functionally illiterate, and probably about the same rate innumerate

Sutherland’s book, like all books of this type, is targeted at a relatively small proportion of the population: the well-educated professional classes. Most people aren’t like that. You want proof? Trump. Brexit. Boris Johnson landslide.

Trying to keep those cognitive errors at bay (otherwise known as The Witch by Pieter Bruegel the Elder)

Reviews of other science books

Cosmology

- The Perfect Theory by Pedro G. Ferreira (2014)

- The Book of Universes by John D. Barrow (2011)

- The Origin of the Universe: To the Edge of Space and Time by John D. Barrow (1994)

- The Last Three Minutes: Conjectures about the Ultimate Fate of the Universe by Paul Davies (1994)

- A Brief History of Time: From the Big Bang to Black Holes by Stephen Hawking (1988)

- The Black Cloud by Fred Hoyle (1957)

The Environment

- The Sixth Extinction: An Unnatural History by Elizabeth Kolbert (2014)

- The Sixth Extinction by Richard Leakey and Roger Lewin (1995)

Genetics and life

- Life at the Speed of Light: From the Double Helix to the Dawn of Digital Life by J. Craig Venter (2013)

- What Is Life? How Chemistry Becomes Biology by Addy Pross (2012)

- Seven Clues to the Origin of Life by A.G. Cairns-Smith (1985)

- The Double Helix by James Watson (1968)

Maths

- Alex’s Adventures in Numberland by Alex Bellos (2010)

- Nature’s Numbers: Discovering Order and Pattern in the Universe by Ian Stewart (1995)

- Innumeracy: Mathematical Illiteracy and Its Consequences by John Allen Paulos (1988)

- A Mathematician Reads the Newspaper: Making Sense of the Numbers in the Headlines by John Allen Paulos (1995)